NINON LIZÉ MASCLEF

Media Artist & Research Scientist

2020-2021

Perceptually grounded metrics to evaluate state-of-the-art automatic lyrics-to-audio alignment models.

role

Experiment design, data analysis, full stack development

conference

Ninon Lizé Masclef, Andrea Vaglio, & Manuel Moussallam. (2021). User-centered evaluation of lyrics-to-audio alignment. Proceedings of the 22nd International Society for Music Information Retrieval Conference, 420–427

keywords

music information retrieval, psychology, evaluation metrics, user-centric, research

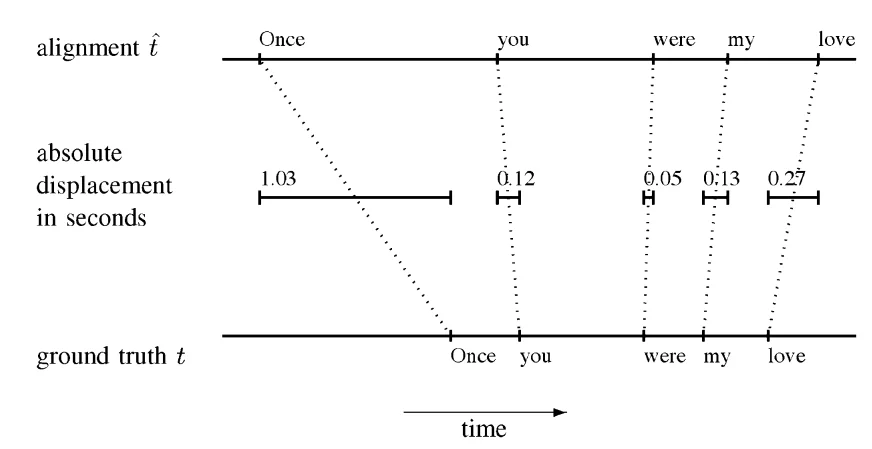

Lyrics-to-audio alignment is the task of synchronizing units of lyrics text with the time positions at which they occur in the audio signal. Its main applications are karaoke, navigation within songs, and explicit lyrics removal. One of the most commonly used metrics for this task is the percentage of correct onsets (PCO), which compares the predicted alignment t̂ with the ground-truth alignment t using a tolerance window of 0.3 seconds, a value meant to represent the maximum displacement a human can tolerate.
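As an illustration of the definition above, here is a minimal sketch of PCO in Python. It assumes onsets are given in seconds as two equal-length lists (one predicted, one ground truth); the function name and the toy values are hypothetical and not taken from the paper.

```python
from typing import Sequence


def percentage_of_correct_onsets(
    predicted: Sequence[float],
    reference: Sequence[float],
    tolerance: float = 0.3,
) -> float:
    """Share (in percent) of onsets whose absolute error is within `tolerance` seconds."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if len(reference) == 0:
        raise ValueError("at least one onset is required")
    # An onset counts as correct when |t_hat - t| <= tolerance.
    correct = sum(
        1 for t_hat, t in zip(predicted, reference) if abs(t_hat - t) <= tolerance
    )
    return 100.0 * correct / len(reference)


# Toy onsets in seconds (illustrative only).
reference = [0.50, 1.20, 2.00, 3.10]
predicted = [0.55, 1.60, 2.25, 3.05]
score = percentage_of_correct_onsets(predicted, reference)
print(score)  # 75.0: three of the four onsets fall within 0.3 s
```

Note that the window is symmetric: an onset predicted 0.25 s early and one predicted 0.25 s late are scored identically.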

Calculation of the performance metrics

Like most Music Information Retrieval metrics, PCO is defined independently of both the music and the user. This is convenient from a researcher's practical standpoint, but as state-of-the-art systems keep getting better, further improvement will have to be measured differently, taking into account not only the magnitude of errors but also how perceptually noticeable they are.

Psychological insights

Reviewing the psychology literature on synchrony perception, I found that the perception of synchrony relies on both global and local rhythmic context. Individuals use musical and linguistic cues to perceive rhythm, and they tend to tolerate audio lagging more than visual lagging.

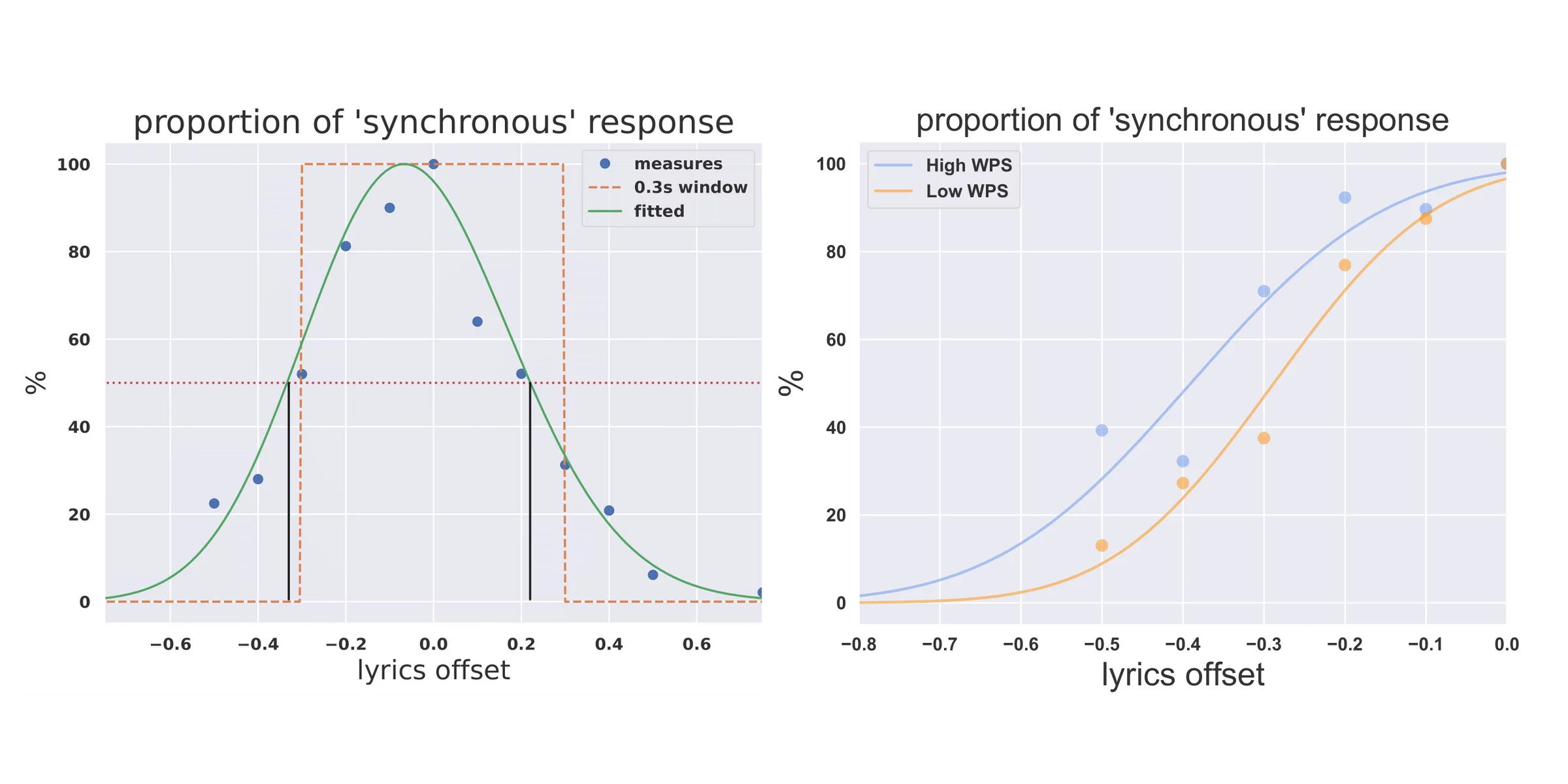

The PCO metric assumes that synchrony perception is symmetric and independent of the musical and linguistic features of a given song. By contrast, I hypothesized that synchrony perception is asymmetric, namely that audio lagging is preferred over lyrics lagging. I also hypothesized that global and local rhythmic factors have an impact on synchrony perception.

Experiment Design

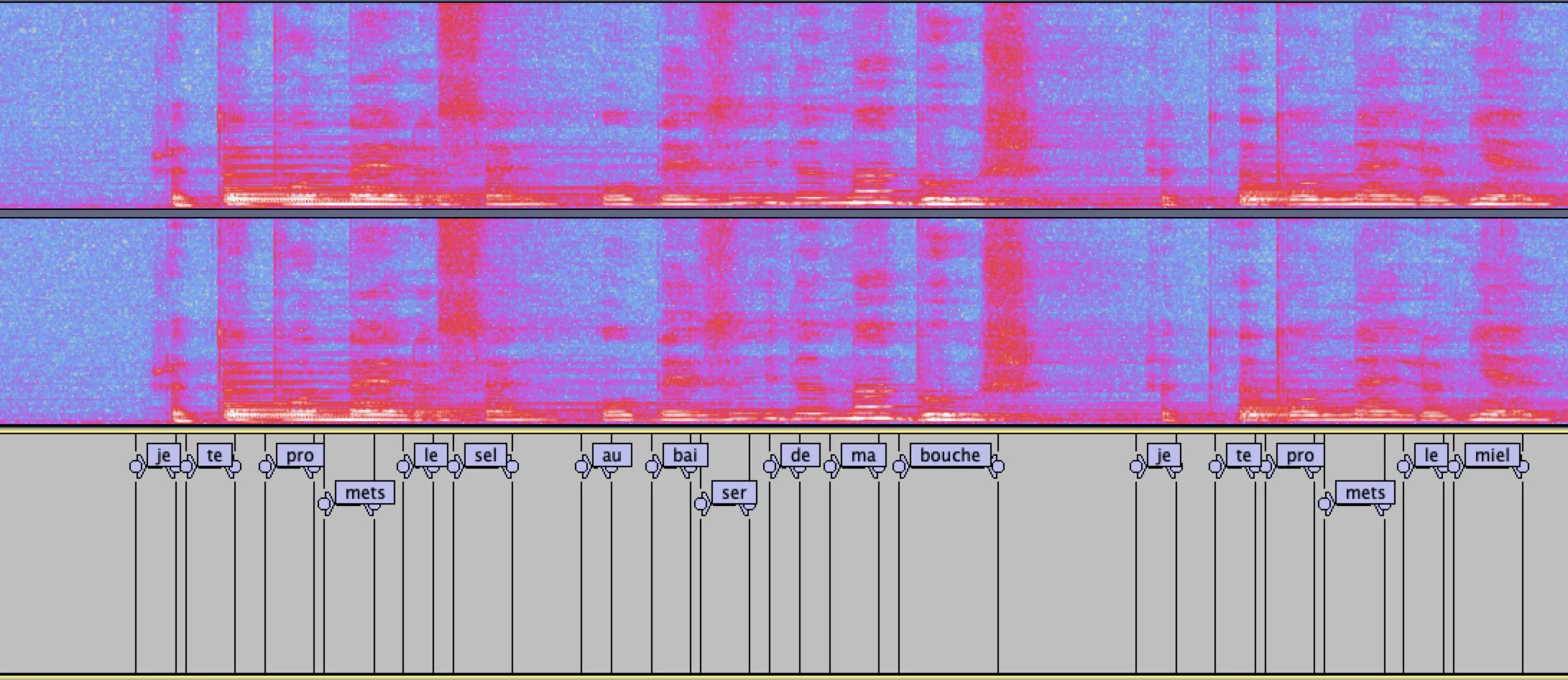

To test these hypotheses, I designed two experiments inspired by the main application of this task: karaoke. During the experiment, alignment errors are generated by adding an offset between lyrics and audio, with values ranging from minus one second to plus one second. Participants then rated the perceived quality of the alignment with a ternary choice: whether the lyrics were ahead, lagging, or synchronous.

Results

Looking at the respective thresholds for lyrics ahead and lyrics lagging, it is clear that individuals prefer lyrics ahead over lyrics lagging. Moreover, participants are more tolerant of lyrics ahead at a high word rate than at a low word rate.

Implications for evaluation metrics

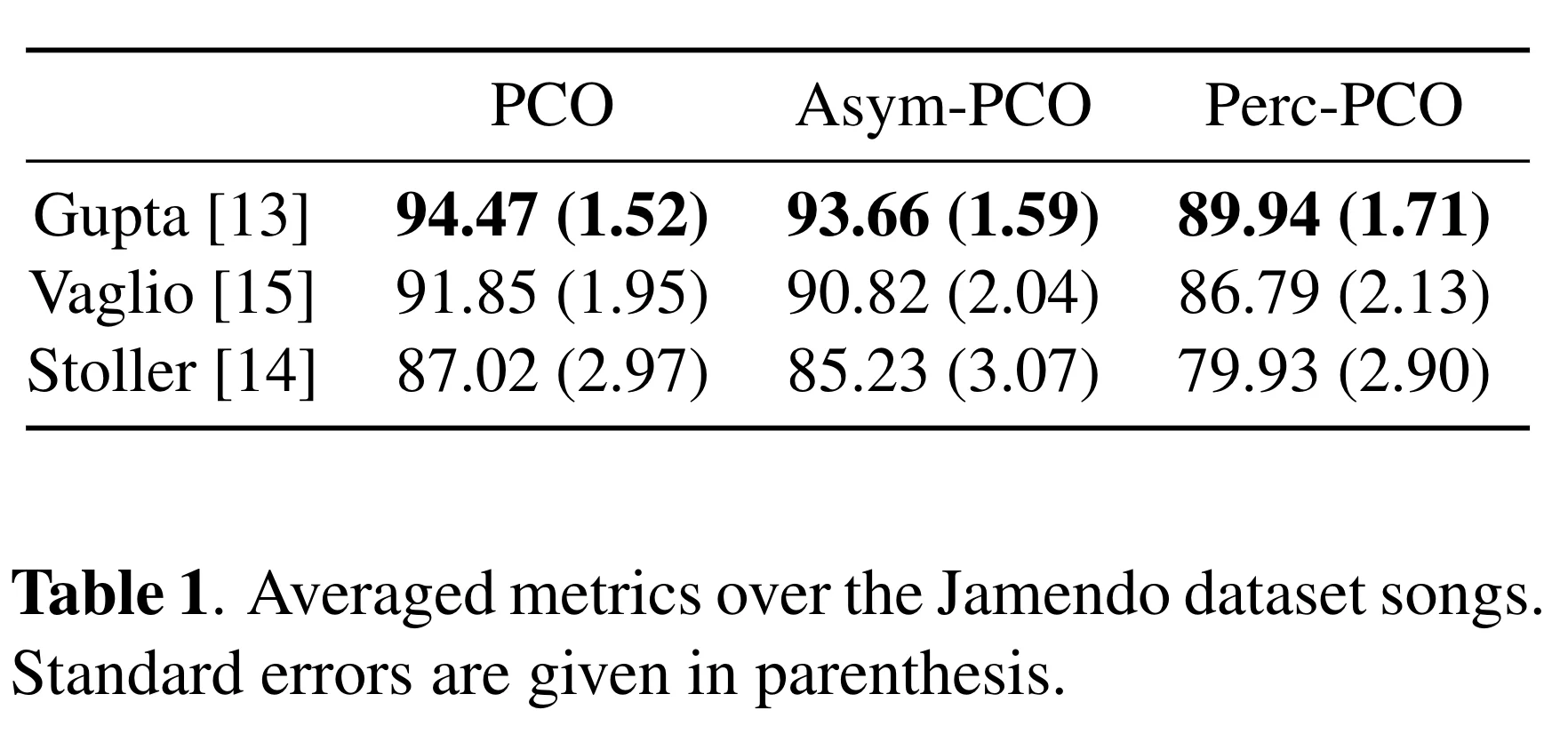

Finally, from these results I proposed a perceptually grounded metric that captures the relative importance of alignment errors according to human perception. I evaluated three state-of-the-art automatic lyrics alignment models with three versions of the PCO metric: the standard window, a slightly shifted window, and the perceptual PCO. Interestingly, there appears to be little difference between using the standard PCO window and a slightly shifted one. However, scores for the perceptual PCO are much lower.
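To make the idea of a shifted window concrete, here is a minimal sketch of a PCO variant with separate tolerances for lyrics that appear early and lyrics that appear late. This is only one possible reading and not the perceptual metric defined in the paper. The function name, the sign convention (negative error means lyrics ahead of the audio), and the tolerance values 0.5 s and 0.2 s are all assumptions chosen for illustration.

```python
from typing import Sequence


def windowed_pco(
    predicted: Sequence[float],
    reference: Sequence[float],
    max_ahead: float = 0.5,  # hypothetical tolerance for lyrics shown early, in seconds
    max_lag: float = 0.2,    # hypothetical tolerance for lyrics shown late, in seconds
) -> float:
    """Percent of onsets whose signed error lies in [-max_ahead, +max_lag]."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if len(reference) == 0:
        raise ValueError("at least one onset is required")
    # Assumed convention: error < 0 means the lyric is predicted before its true onset.
    correct = sum(
        1
        for t_hat, t in zip(predicted, reference)
        if -max_ahead <= (t_hat - t) <= max_lag
    )
    return 100.0 * correct / len(reference)


# Toy onsets in seconds, constructed so that two lyrics appear clearly early.
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [0.6, 1.65, 3.1, 4.0]

symmetric = windowed_pco(predicted, reference, max_ahead=0.3, max_lag=0.3)
shifted = windowed_pco(predicted, reference)
print(symmetric, shifted)  # 50.0 100.0
```

The toy data is built to show the mechanism only; it says nothing about how the evaluated models actually score under each variant.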

The paper "User-centered evaluation of lyrics-to-audio alignment", co-written with Andrea Vaglio and Manuel Moussallam, is available here. I presented it at the International Society for Music Information Retrieval (ISMIR) conference in 2021.